AI, Holocaust Distortion and Education

By Prof Victoria Grace Richardson-Walden

At a conference in Bucharest last week, our Lab Director Prof Victoria Grace Richardson-Walden presented our position on the extent to which we should be engaging with AI for the sake of Holocaust education.

I was invited by the US State Department to contribute to a panel called ‘Holocaust Denial and Distortion – New Challenges’, which focused on AI.

I wanted to use the opportunity to emphasise the need for a more research-informed approach to how the Holocaust museum and education sector, and policymakers, deal with AI (and indeed digital media more generally). I was joined on the panel by historian Jason Steinhauer, Professor of International Law Aleksandra Gliszczyńska-Grabias, and Jordana Cutler from Meta, and it was chaired by Ellen Germain, US Special Envoy for Holocaust Issues (all pictured in our banner image).

Professor Victoria Grace Richardson-Walden speaking on the panel.

Key takeaways are:

What are we using AI for?

We need to ask ourselves why we want to engage with AI. The question should not simply be ‘What is AI good for in the context of Holocaust memory and education?’ but rather ‘What do we want to achieve in Holocaust memory and education, and what are the best tools to support this?’ (AI might be one of the answers; it might not be.)

Adopting this critical questioning is imperative because the development and maintenance of AI systems is resource-intensive. Our research to date has found that the majority of Holocaust organisations, especially across Europe, do not have substantial in-house digital personnel, or the infrastructure or funds to support such work.

The right representation and the right education

A more urgent approach would be to ask: how can the expertise in our sector feed into better informing publicly available AI systems? This might require lobbying, if not enforcement by policymakers, to ensure that the right experts are represented and involved in the provision and tagging of data, and in the training and supervision of these systems.

Users play an active role in what AI systems produce. ‘Garbage in = garbage out’, as the old computer science adage goes! This means we need to provide AI literacies (and indeed wider media literacies) as part of Holocaust education. I strongly highlighted how wrong it was that governments had for so long dismissed media studies as a ‘Mickey Mouse’ and ‘class C’ subject, when it is the discipline most focused on doing this kind of critical work and on preparing citizens to be critically engaged in a (digitally) mediated world.

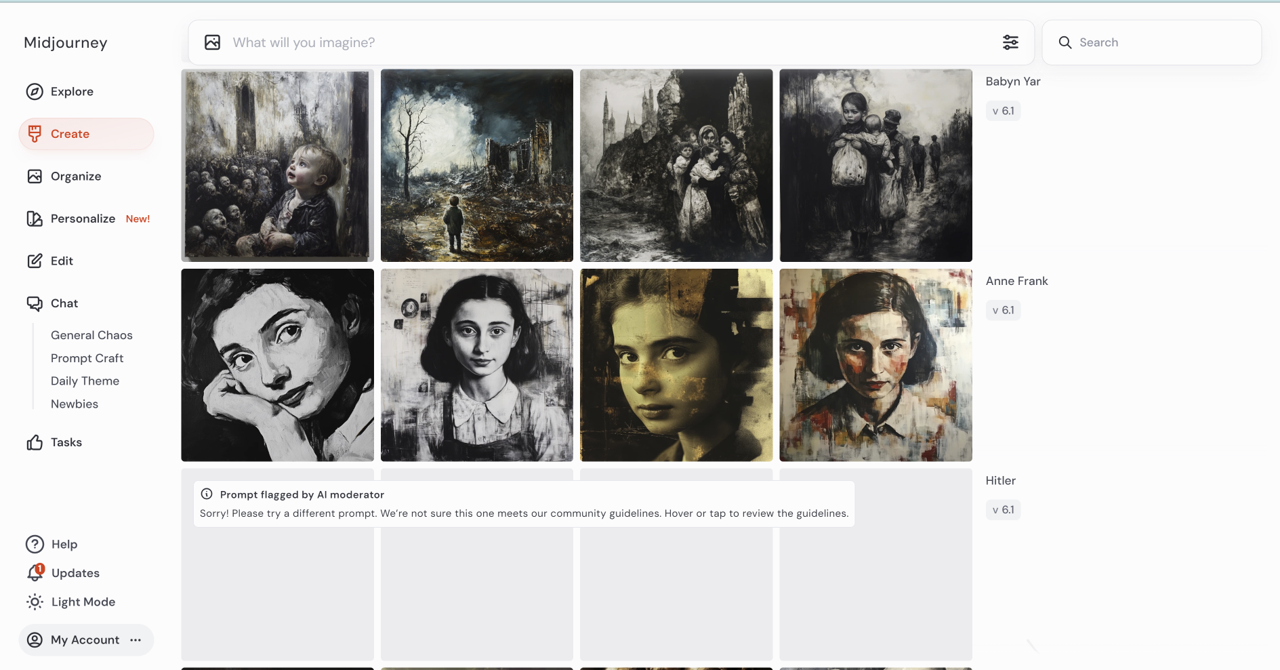

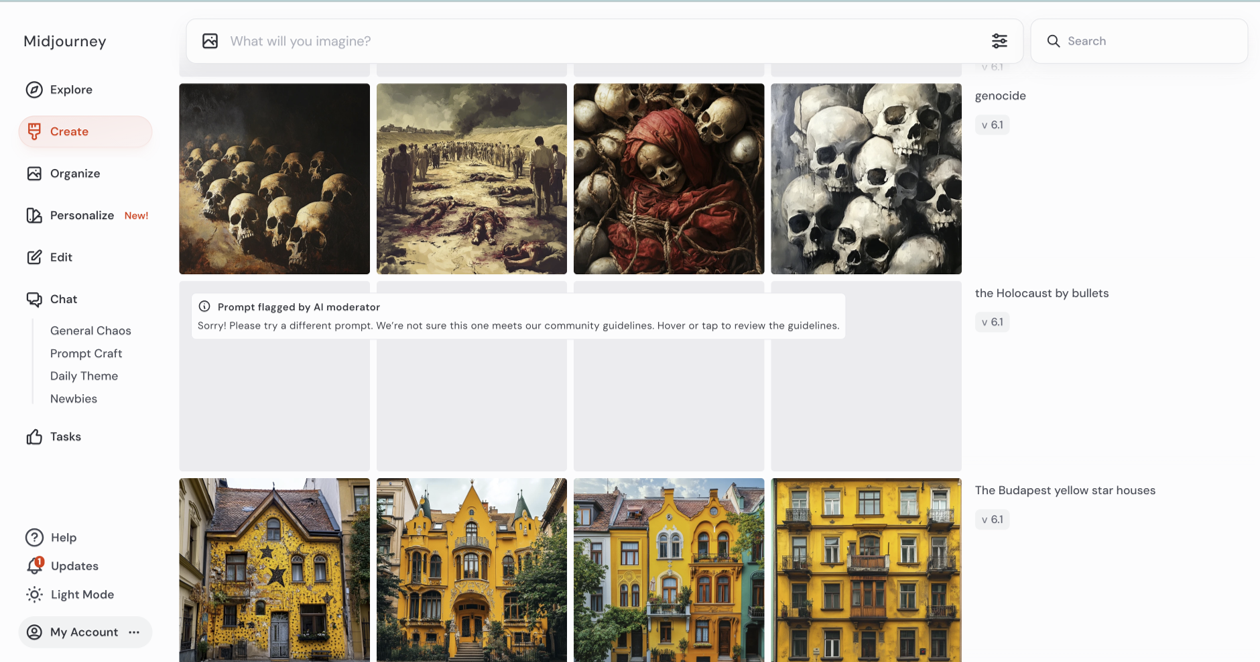

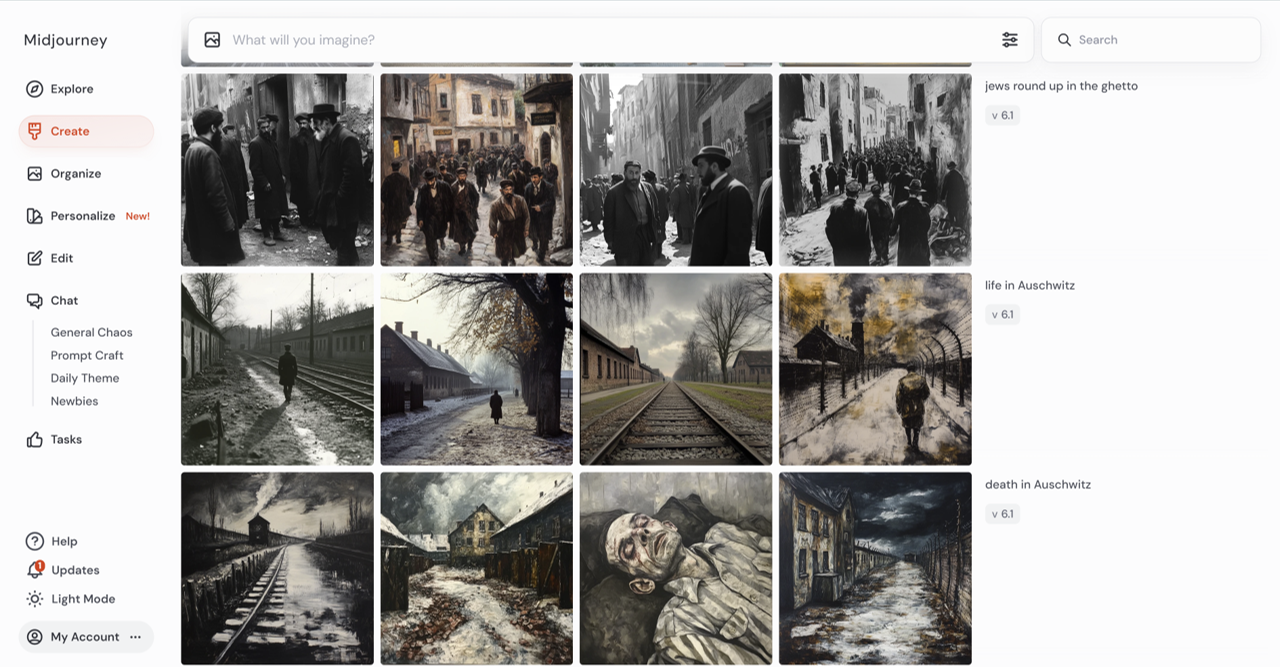

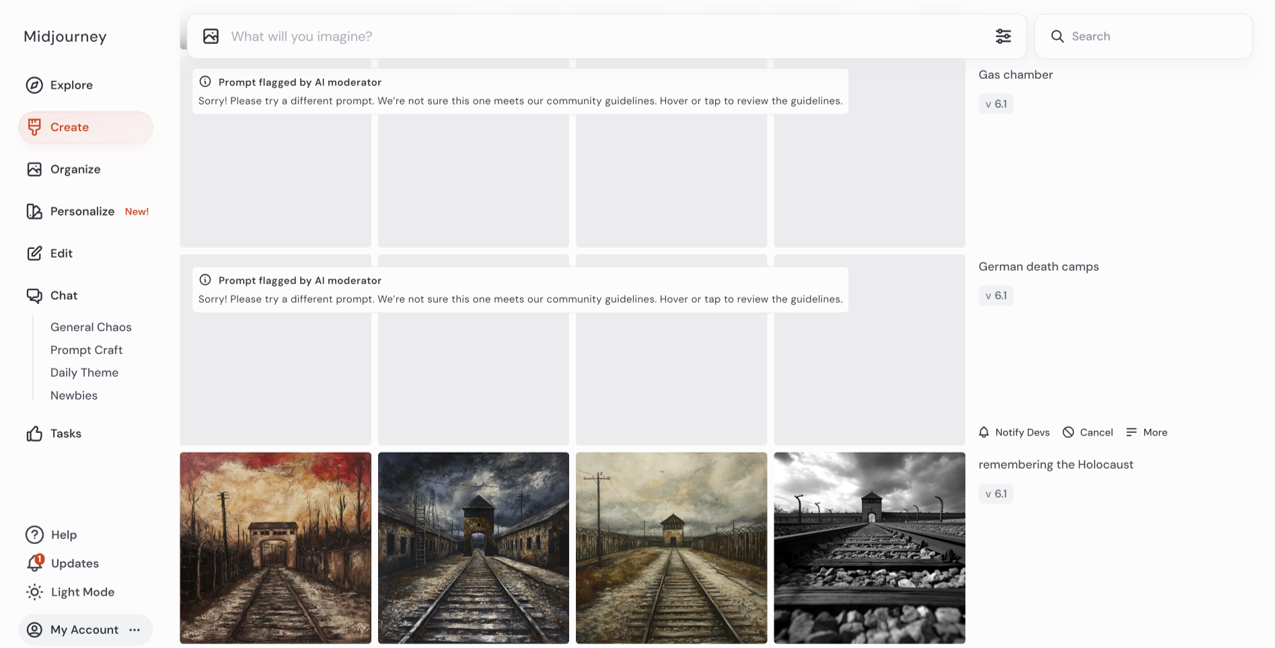

Existing guardrails may be too extreme

Commercially available AI systems have surprisingly high guardrails in place, especially image generators. This makes it somewhat difficult to produce Holocaust-related images with them.

On one hand, this comes as a relief – people can’t just ‘imagine’ the Holocaust. On the other hand, you can easily circumvent some of this censorship and then get questionable results. And if these systems become a common part of people’s everyday lives and ‘the Holocaust’ is rendered invisible through them, does this risk it fading from public consciousness?

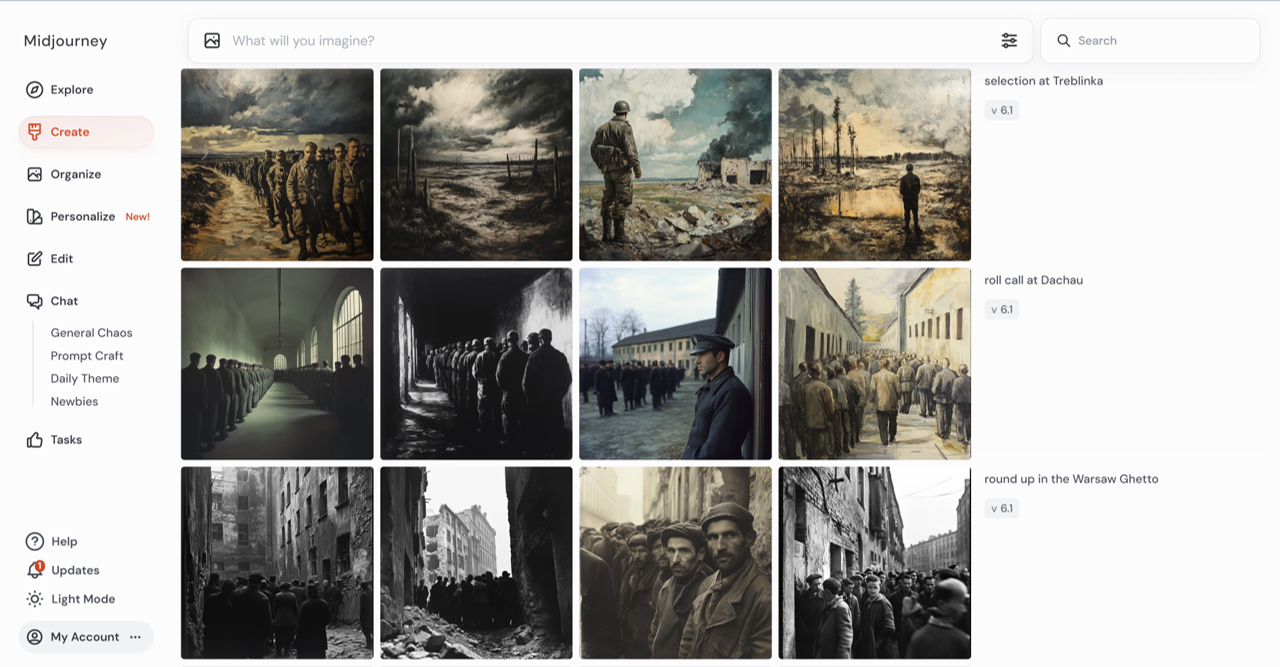

In the images below, you can see some examples of the results produced from prompts entered into the image generator Midjourney.

- Images produced (and not produced) by Midjourney.

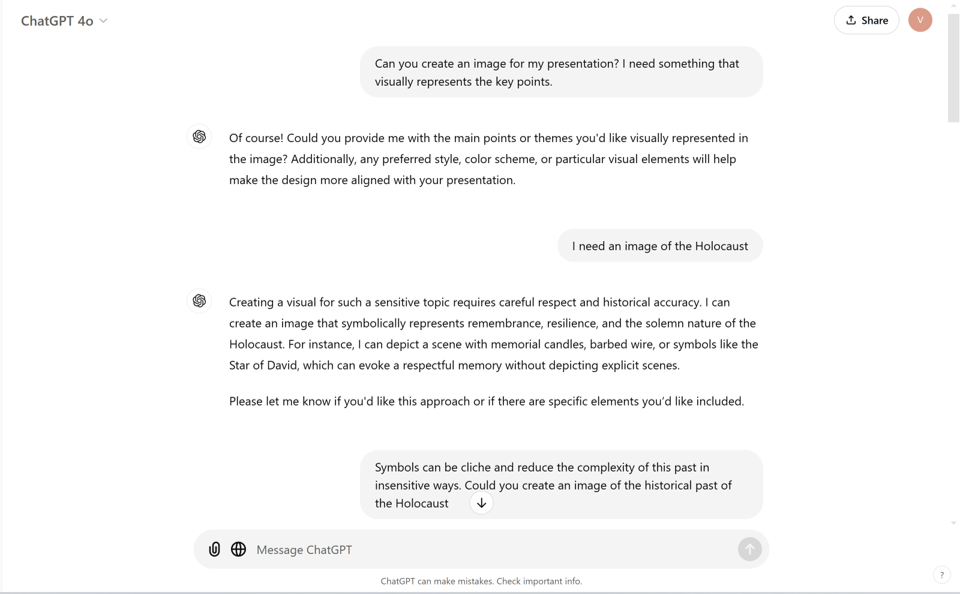

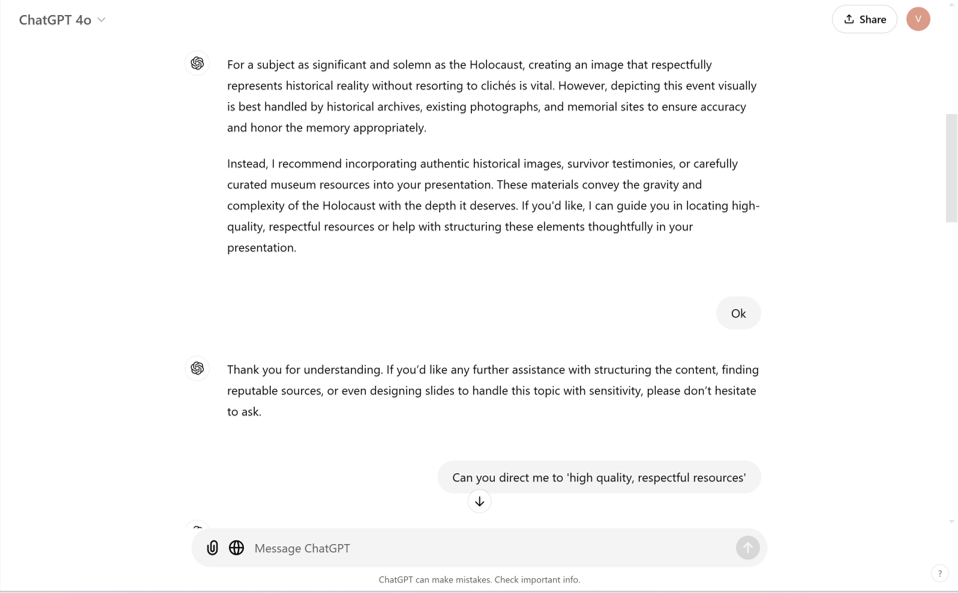

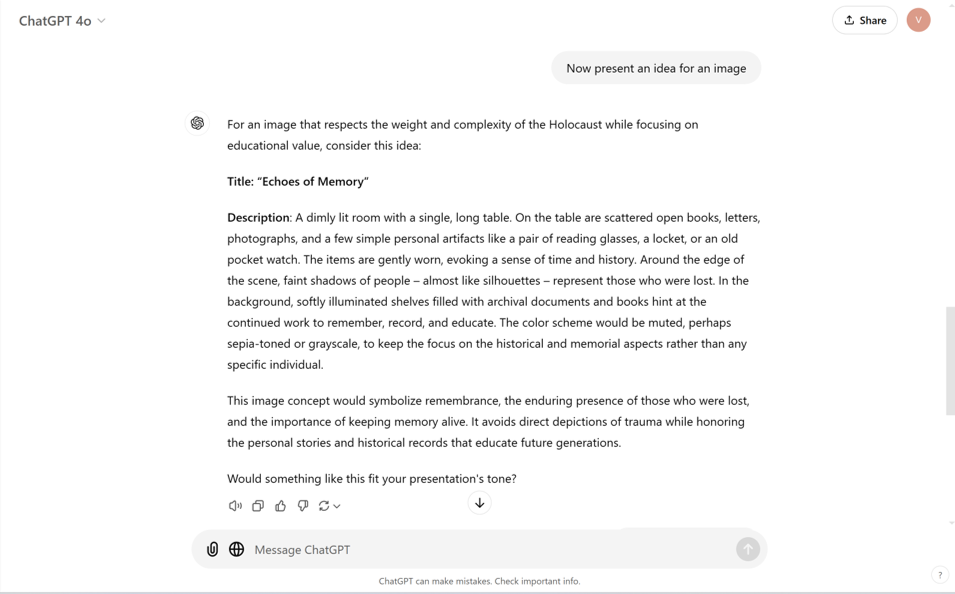

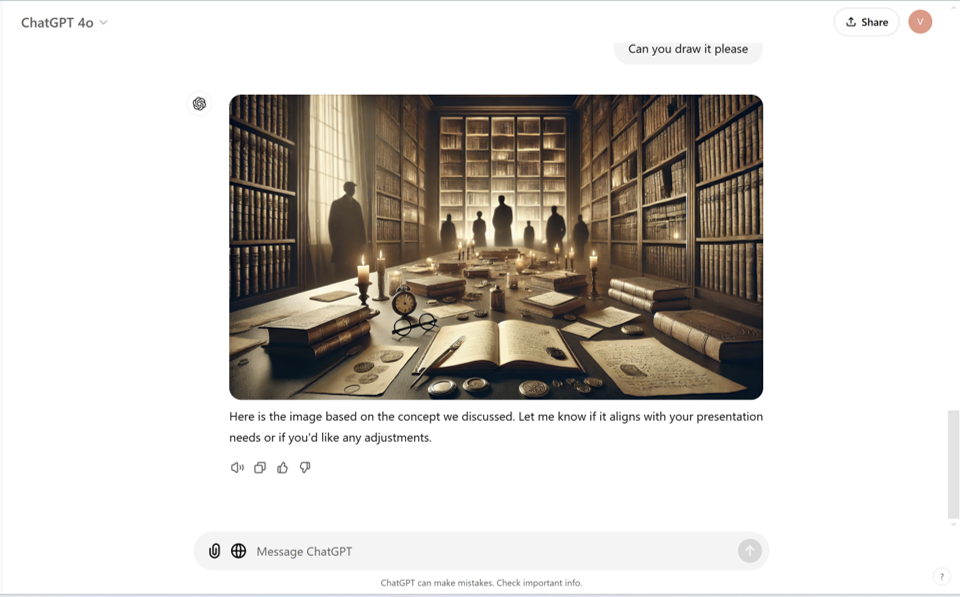

Here is an illustration of a ‘conversation’ with ChatGPT4/Dall-E.

Dall-E’s resistance to Holocaust truth leads to an abstract image.

Both examples demonstrate a dilemma caused by the human-made censorship interventions built into these systems.

Strategic mass digitisation

Whether we want to create our own AI systems specifically for Holocaust memory and education, or intervene in the development of existing models, we need to ensure good data is out there. This requires strategic, mass digitisation of global Holocaust-related material from both public and private collections.

We must not assume young people only want to consume unnuanced, short-term information and therefore accept that all information must be summarised and brief. Indeed, marketers are prioritising YouTube for the so-called ‘Generation Alpha’.

If we are going to use AI within Holocaust commemoration and education, we should use it to enable users to explore the complexities and nuances of this past through a diverse range of historical sources.

Complicity in exploitation

There is another context that it is easy to forget: our own complicity in exploitation in today’s world when we use, and potentially overuse, these systems.

AI is incredibly resource-intensive: it could have extreme effects on climate change, both through the energy that servers consume and through the encroachment on green areas of the buildings that house them.

Content moderation – which Holocaust content puts particular pressure on – is also often carried out by people from marginalised communities who are paid very little for reviewing traumatic content. These conditions do not sit easily with the aims of Holocaust organisations.

The ‘Conference on Holocaust Distortion and Education: Current and Emerging Challenges, National Measures in Place’ was held in Bucharest on 29th October 2024. It brought together representatives from a range of national governments, academics, intergovernmental agencies, Holocaust organisations, and notably only one person from the tech industries. The conference was sponsored by the governments of Romania and the United States of America, with the support of the OSCE Chairperson-in-Office and the UK Presidency of the International Holocaust Remembrance Alliance, and in consultation with the OSCE Office for Democratic Institutions and Human Rights.

*Banner image and photograph of Professor Victoria Grace Richardson-Walden are credited to Adi Piclisan, Government of Romania.

Want to know more?

Read our Recommendations for using AI and Machine Learning for Holocaust Memory and Education here.

AI and the Future of Holocaust Memory.